NestJS is a powerful framework for developing efficient server-side applications. It supports TypeScript, dependency injection, modular architecture, and much more.

Before a NestJS application can run, its TypeScript source has to be compiled to JavaScript. However, this build process also leaves you with files that aren't needed to run the application, such as source maps, development dependencies, and configuration files. If they end up in your Docker image, they increase its size, which can affect the performance and security of your application. For example, a larger image takes longer to pull and deploy, consumes more disk space, and exposes a larger attack surface to potential attackers.

We'll follow the same approach we used for our Next.js application in a previous guide: multi-stage builds!

# Ok, so what does a normal Dockerfile for NestJS look like?

Like this! But don't copy-paste it just yet, as it needs some work first.

```dockerfile
FROM node:18-alpine
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
RUN npm run build
CMD ["node", "dist/main.js"]
```

This will:

- Pull the Alpine-based Node.js 18 image
- Copy over your `package.json` and `package-lock.json` files
- Install your dependencies
- Copy over the rest of your files
- Build your NestJS application
- Set the command that starts the production server when a container runs

And this will get the job done just fine. You can check that the image builds by running:

```bash
docker build -t nestjs-api .
```

However, NestJS doesn't need all these files to run. In fact, once the build step has compiled your TypeScript into `dist`, most of your source code goes completely unused. Not to mention all those locally installed dependencies! Don't believe me? Run this in your terminal to get the size of the image you've just built:

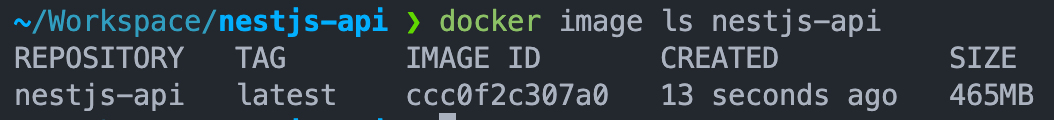

```bash
docker image ls nestjs-api
```

That's 465MB for the simplest NestJS application with no other dependencies or code!

# Cool. So what can we do about this? 🤔

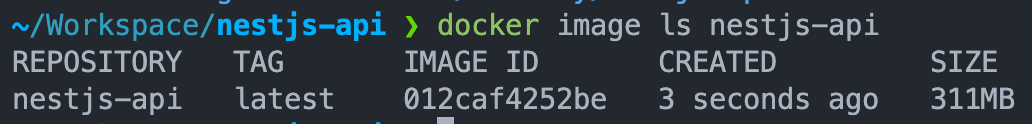

First off, we can tell Docker to skip certain files and folders when it copies your project into the image. This keeps your builds more deterministic and prevents locally installed dependencies, which may include native binaries compiled for your machine's OS or CPU architecture, from ending up in the image. Create a new file called `.dockerignore` in your project root and add the following:

```
Dockerfile
.dockerignore
node_modules
npm-debug.log
README.md
dist
.git
```

Now, rebuild your Docker image, and just like that we're already down to 311MB!

# That still feels like a lot...

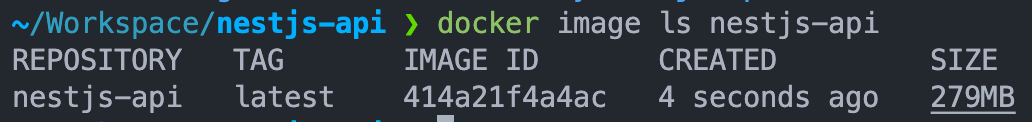

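Thankfully, multi-stage builds exist! A multi-stage Dockerfile contains several `FROM` instructions, each of which starts a new stage. You can build your application in one stage, then copy only the output you need into a fresh stage; by default, only the final stage becomes your image. This lets your NestJS Dockerfile produce a smaller, more efficient image that contains only the files necessary to run your application, which makes it faster to pull, deploy, and update, and leaves less for an attacker to work with.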

Let's fix our previous Dockerfile:

```dockerfile
FROM node:18-alpine AS builder
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
RUN npm run build

FROM node:18-alpine AS runner
WORKDIR /app
COPY --from=builder /app/dist ./dist
COPY --from=builder /app/node_modules ./node_modules
CMD ["node", "dist/main.js"]
```

Here's what changed:

- We've named our first stage `builder`. This lets later stages copy files out of it with `COPY --from=builder`, without redoing any of its steps.
- We've added a second stage called `runner`. This is the stage that actually becomes the final image.
- Then, we copy over just the files our NestJS application needs to run: the `dist` and `node_modules` folders.
- Finally, we start the production server!

Rebuilding our Docker image shows that we managed to trim off just a bit over 30MB more!

# That's still not enough! 😤

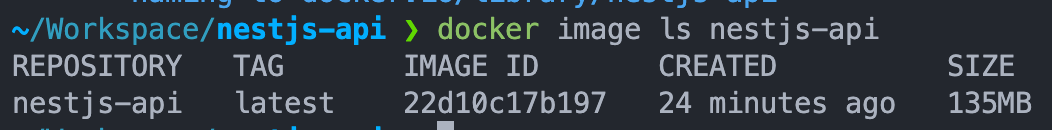

Indeed. Let's see what we can do to reduce this even further.

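- Instead of `npm install`, let's use `npm ci`, the recommended way of running clean installs in Continuous Integration environments. It installs exactly the versions recorded in your `package-lock.json` and removes any existing `node_modules` folder first.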

```dockerfile
COPY package*.json ./
RUN npm ci
```

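- Some npm packages (Express, which NestJS uses under the hood by default, is one of them) enable optimizations when they detect that the `NODE_ENV` environment variable is set to `production`. Let's set that too, but only after the build step: when `NODE_ENV` is `production`, npm skips `devDependencies` by default, and building a NestJS app needs them (the Nest CLI and TypeScript are dev dependencies in a default project).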

```dockerfile
ENV NODE_ENV=production
```

- After building our shippable code, let's re-run the installation, this time fetching only the production dependencies. By design, `devDependencies` are meant for development, linting, testing, and building, and their code isn't intended to run in production.

```dockerfile
RUN npm run build
# Reinstall with production dependencies only (--omit=dev needs npm 7 or newer)
RUN npm ci --omit=dev
```

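- Then, once we're done installing our dependencies, we no longer need the npm cache, so we can safely get rid of it. Chain the cleanup onto the same `RUN` instruction; if it ran as a separate step, the cache would still be stored in the earlier layer and the image wouldn't get any smaller.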

```dockerfile
RUN npm run build
RUN npm ci --omit=dev && npm cache clean --force
```

- For bonus points, you can run your app as the non-root `node` user that ships with the official Node.js images, instead of the default `root` user. This doesn't have a performance benefit, but it limits the damage an attacker can do if they compromise your app. We'll switch users with `USER node` right before the `CMD`, but first let's make sure the `node` user owns the files it needs by copying them with `--chown=node:node`.

```dockerfile
COPY --from=builder --chown=node:node /app/dist ./dist
COPY --from=builder --chown=node:node /app/node_modules ./node_modules
USER node
CMD ["node", "dist/main.js"]
```
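For reference, here's a sketch of how the npm-only snippets above might slot into the two-stage Dockerfile from earlier. It assumes a project with a `package-lock.json` and the default `npm run build` script that outputs to `dist/`. It also sets `NODE_ENV` again in the `runner` stage, because `ENV` values don't carry over from one build stage to the next.

```dockerfile
FROM node:18-alpine AS builder
WORKDIR /app
COPY package*.json ./
# NODE_ENV is not set yet, so devDependencies (needed by the build) are installed
RUN npm ci
COPY . .
RUN npm run build
# Switch to production mode only once the build is done
ENV NODE_ENV=production
# Reinstall with production dependencies only, dropping the npm cache in the same layer
RUN npm ci --omit=dev && npm cache clean --force

FROM node:18-alpine AS runner
WORKDIR /app
# ENV does not carry over between stages, so set it again for runtime
ENV NODE_ENV=production
# Copy only the build output and production dependencies, owned by the node user
COPY --from=builder --chown=node:node /app/dist ./dist
COPY --from=builder --chown=node:node /app/node_modules ./node_modules
# Run as the non-root user provided by the official image
USER node
CMD ["node", "dist/main.js"]
```

You can build it and check its size the same way as before, with `docker build -t nestjs-api .` followed by `docker image ls nestjs-api`.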

# Let's put it all together... wait, I don't use npm 😬

I get you, I love me some pnpm in my life. But most guides I've read pretty much only cover npm or yarn. And then you gotta spend the time to switch the Dockerfile commands to your package manager of choice.

No more. What if we had a "catch-all" Dockerfile instead, which adapts to use npm, yarn, or pnpm, based on what kind of lockfile you have in your project root?

Try this one on for size:

```dockerfile
FROM node:18-alpine AS deps
WORKDIR /app

# Copy only the files needed to install dependencies
COPY package.json yarn.lock* package-lock.json* pnpm-lock.yaml* ./

# Install dependencies with the preferred package manager
RUN \
  if [ -f package-lock.json ]; then npm ci; \
  elif [ -f yarn.lock ]; then yarn --frozen-lockfile; \
  elif [ -f pnpm-lock.yaml ]; then corepack enable pnpm && pnpm i --frozen-lockfile; \
  else echo "Lockfile not found." && exit 1; \
  fi

FROM node:18-alpine AS builder
WORKDIR /app
COPY --from=deps /app/node_modules ./node_modules

# Copy the rest of the files
COPY . .

# Run build with the preferred package manager
RUN \
  if [ -f package-lock.json ]; then npm run build; \
  elif [ -f yarn.lock ]; then yarn build; \
  elif [ -f pnpm-lock.yaml ]; then corepack enable pnpm && pnpm build; \
  else echo "Lockfile not found." && exit 1; \
  fi

# Set NODE_ENV environment variable
ENV NODE_ENV=production

# Re-run install only for production dependencies
RUN \
  if [ -f package-lock.json ]; then npm ci --omit=dev && npm cache clean --force; \
  elif [ -f yarn.lock ]; then yarn --frozen-lockfile --production && yarn cache clean; \
  elif [ -f pnpm-lock.yaml ]; then corepack enable pnpm && pnpm i --frozen-lockfile --prod; \
  else echo "Lockfile not found." && exit 1; \
  fi

FROM node:18-alpine AS runner
WORKDIR /app

# ENV does not carry over between stages, so set NODE_ENV again for runtime
ENV NODE_ENV=production

# Copy the bundled code from the builder stage
COPY --from=builder --chown=node:node /app/dist ./dist
COPY --from=builder --chown=node:node /app/node_modules ./node_modules

# Use the node user from the image
USER node

# Start the server
CMD ["node", "dist/main.js"]
```

Let's build our image one final time and check its size:

Marvelous! That's more than a 3.5x reduction in total image size!

And that should do it! In the next guides in this series, we'll talk about creating a `docker-compose.yaml` file for working on your NestJS application locally, adding Turborepo integration for use with our previously created Next.js application, and exploring authentication options such as Social Login and Single Sign-On.

Until next time — happy coding!